- Published on

Visit to UGent's datacenter

- Authors

- Name

- Tibo Gabriels

In October 2021, I had the awesome opportunity to visit the datacenter of UGent. Being the hardware geek I am, I had a lot to look forward to.

Grafana all the way

We started the visit in a conference room, where we were briefed about the general rules. Already I noticed an office with huge monitors displaying all sorts of graphs and statistics in a Grafana dashboard.

The hall of plenty servers

After this, we could finally enter the huge complex. We started in the first hall and were greeted by long rows of racks filled with Dell servers. They were arranged so that two rows facing each other were sealed off into a controlled environment for temperature regulation. The air in there was blown through the racks to the back, where it was piped off to the datacenter’s cooling system. I had seen rack servers before, usually a bit smaller. But seeing the infrastructure built to keep the systems cool was quite… well, cool. And it gets better! But more on that later.

Tape storage: not that retro!

It seemed most of these racks were filled randomly: some storage servers here, some compute servers there. There was probably a logic to the layout, but I couldn’t figure it out. Making my way further into this first hall, I stumbled upon a huge metal box, wider than the racks before it, that on closer inspection turned out to be a tape storage system. Inside were racks full of magnetic tapes, but even cooler was the robot arm whizzing through and grabbing tape after tape to read or write. This may seem convoluted for a storage system. I mean, who uses tapes in 2021? And a robot arm?! But it turns out that for long-term archival storage, tape is still king! While the equipment to use them is expensive, the tapes themselves are very cheap compared to hard drives, data-dense and very durable. One downside is that you can only do sequential operations, but for archival storage this is not an issue.
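To get a feel for why sequential access matters, here is a minimal toy model in Python. It is only a sketch under my own assumptions: the tape is a row of numbered blocks, winding past a block costs one unit, and reading a block costs one unit. The function name `tape_travel` and the block orders are made up for illustration and have nothing to do with the actual system at UGent.

```python
def tape_travel(block_order, start=0):
    """Total distance the tape winds to read blocks in the given order (toy model)."""
    position = start
    travelled = 0
    for block in block_order:
        # Wind forward or backward until the wanted block is under the head.
        travelled += abs(block - position)
        # Reading the block moves the tape one block further.
        travelled += 1
        position = block + 1
    return travelled


# Hypothetical access patterns over the same eight blocks.
sequential = list(range(8))            # archive-style: read front to back
scattered = [5, 0, 7, 2, 6, 1, 4, 3]   # disk-style: jump around

print("sequential:", tape_travel(sequential))
print("scattered: ", tape_travel(scattered))
```

Reading front to back never winds further than the data itself, while jumping around spends most of its time spooling. An archive is mostly written once and read back in bulk, so it rarely hits the expensive case.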

A small networking closet

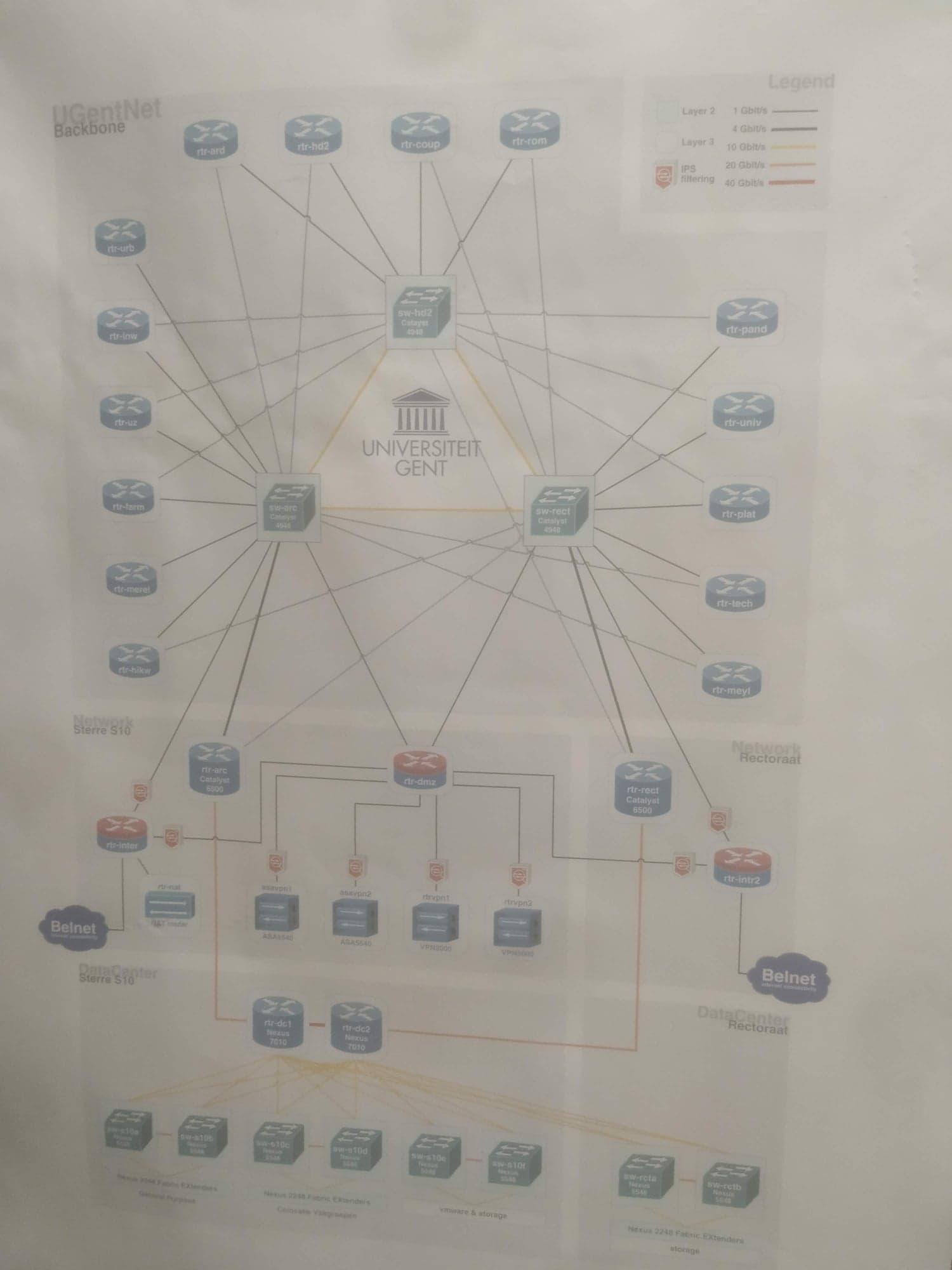

I could have stared at that robot arm for hours, but a whole datacenter of expensive toys was waiting to be discovered. I made my way to the second hall, about half the size of the first. This turned out to be the networking “closet” of the datacenter. Here we got a view of all the switches and routers that make UGent tick. Lots of fiber optics of course, but take a look at this:

Here we can see the actual network structure. A quick legend:

- Black: 1 Gbit/s – 4 Gbit/s

- Yellow: 10 Gbit/s

- Orange: 20 Gbit/s

- Red: 40 Gbit/s

Wow! I wish I had that kind of networking at home! In the plan, you can clearly see the redundancy you expect at a professional institution. But perhaps even more interesting: the 2 uplinks to the internet are 1 Gbit/s.

Only 1 Gigabit per second.

You would really expect a datacenter to need more bandwidth, but no, this is apparently enough. The advantage of keeping everything in-house, perhaps? Even so, it baffles me that this entire datacenter, as well as a secondary datacenter, can run on an internet cable the size of my pinkie finger!
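To put 1 Gbit/s in perspective, here is a quick back-of-the-envelope calculation in Python. It is a rough sketch: the 1 TB payload is a number I picked for illustration, and the math ignores protocol overhead and assumes the link is fully available, so real transfers would take longer.

```python
def transfer_seconds(size_bytes, link_bits_per_second):
    """Ideal time to push size_bytes through a link, ignoring all overhead."""
    return size_bytes * 8 / link_bits_per_second


ONE_TERABYTE = 10**12   # hypothetical payload, decimal terabyte
ONE_GIGABIT = 10**9     # uplink speed from the network plan, in bit/s

seconds = transfer_seconds(ONE_TERABYTE, ONE_GIGABIT)
print(f"{seconds:.0f} s, or about {seconds / 3600:.1f} hours")
```

So moving a single terabyte to the outside world takes a couple of hours at best, which makes it all the more striking how much of the heavy traffic must stay on the fat internal links.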

Infinite power

Moving on, we visited what powers this entire site: high-voltage switches, huge battery banks the size of rack servers themselves, and huge cables the width of my leg bringing all this power to the rest of the facility. As you’d expect, everything is redundant. There are 2 separate battery grids, each capable of seamlessly powering the entire facility for a few minutes. That may seem very short, but remember that this entire complex consumes 1 gigawatt of power. In fact, it’s built to be able to expand to double that. So yes, a few minutes may not be so insignificant. But more importantly, it is long enough for the backup generator to get started. At the end of the facility was a huge loading and storage section full of old and new hardware, but also a huge metal monster that sucks in air and runs on gasoline in case of a power outage. On the exterior wall there were metal vents that would swing open when the generator starts, just from the pressure difference!

How many megaflops?

After this we visited another computing hall. This one was still very much being assembled, but what was already installed was amazing. This hall is dedicated to high performance computing, with medical science being a big part of its workload. In contrast to the first hall, most of these servers were from Supermicro instead of Dell. If the first hall was already loud, this one was even louder, and that with fewer servers installed!

Sunset in paradise

To finish our visit, we went to the roof to watch the sunset, all the while admiring the multiple huge AC units used to cool this entire facility’s servers. Our guide mentioned they had to overhaul the system after a heat wave pushed it past its operating temperature, which turned off the AC units. Quite frightening how a few degrees more are enough to cripple an entire datacenter.

Thick tubes covered in even thicker insulation brought warm water up here to be cooled, after which it was carried away again in tubes labelled “ice water”. Funnily enough, the actual temperature was 24 degrees Celsius.

This visit was everything I could have wished for. The only thing that could have made it better is if I could have taken an old server home. But alas, they were bound for developing countries to help their universities. Oh well, I guess they deserve servers too.